A solution in search of a problem

What is generative AI good for?

It was only a matter of time before the latest wave of generative AI crept into music. Suno, a generative AI music startup, released its text-to-song app last December. The app lets you listen to music generated by others, or enter a text prompt to generate your own. An example prompt they give is “a syncopated house song about a literal banana”. Ah yes, just what I was looking for.

The songs sound… like music, I guess? They are certainly a huge improvement over previous generative AI music systems. But when you play with Suno a bit longer, you notice some weird things that you would never hear in real music. In many songs, the “chorus” section (as labeled by Suno) sounds completely different each time it comes around. In every song with vocals, the vocals are of distractingly lower audio quality than the instrumentals. I also can’t get it to generate a song that follows the first simple prompt I thought of[1]. Aside from these specific errors, however, something much bigger is missing.

Just as with AI-generated images, video, and literature, the songs seem fine from a distance. You might think, as I did: “the chords sound right, there’s a melody, there’s a beat, lyrics… I think this is music!” But then you listen closely, and something feels off. Despite some semblance of song structure and musicality, the songs are just shockingly boring, to the point where I would actively choose silence over a Suno song. To see what I mean, listen to these songs from the “Suno Showcase”: vocal, instrumental. It’s weird, right? My theory is that we are being fooled by texture: the appearance of substance, filling space when needed but lacking deeper meaning. Maybe this works for muzak, or transition music for your favorite reality TV show. But that’s the whole point in those settings: the music has no meaning! The songs generated by Suno sound, at best, as interesting as your average formulaic background music. And if you’re okay with that, then Suno is exactly the product you deserve!

But even if models could produce music with some substance beyond superficial texture, there’s still a problem. These types of models, i.e., deep learning models trained to predict the next token, rely on learned statistics to generate new content that looks close to what they’ve seen before. Like, really, really close[2].

Because of phenomena like this, some call generative AI “approximate retrieval”[3] and argue that, rather than exhibiting “reasoning” or “creativity”, the model has probably just seen very similar examples before. Remember that these large models are trained on a huge percentage of the internet, and that the companies do not disclose their exact training sources. Unless you can prove that the generated output is not in the training data, it’s difficult to show definitively that anything besides retrieval is going on.

Okay, so we’ve established that generative AI does a pretty good job producing stuff that resembles what it’s seen before. I don’t know about you, but to me this is not an interesting enough application to be worth the hype and resources we’ve seen in the past few years. So what else is it good for?

A different approach to AI in the creative process is exemplified by Google’s Magenta team, which has been developing machine learning music tools since at least 2016. They market their products (open source, with many interfaces including an Ableton plugin) as creative tools for musicians, in contrast to standalone generative products like Suno and Google’s recently released MusicLM. I like Magenta’s angle! After all, music as we know it today has evolved alongside technological advances: the gramophone, studio recording, analog synthesizers, DAWs, and so on. If machine learning is the natural next step in that progression, why shouldn’t it shape today’s music too?

As a side note, Magenta and similar AI music tools haven’t really taken off, and my theory is that they don’t give musicians fine enough control over the process. The Ableton plugin is impressive and fun to play with, but it has so few parameters. There’s no control over primitives like instrument family or articulation, which is frustrating if you have a specific idea in mind. The tone transfer tool is more low-level, and seems like it could be useful.

I think tools that reduce friction for specific tasks are the best use of generative models at the moment. They can be as basic as code generation to automate simple procedures (I use GitHub Copilot every day), or as impactful as tools that help people with disabilities. ElevenLabs, an AI voice startup, generated the voice of ClimateVoice founder Bill Weihl for a speech; ALS has impaired Weihl’s own ability to speak.

I genuinely think these applications are helpful (as long as they are not abused), and there should and will be more of them. But when reduced to tools, rather than full generation like Suno or DALL-E, can we really call these things AI anymore? I’d say they are really good machine learning tools, driven primarily by impressive engineering.

So then, what need is generative AI actually fulfilling? In an attempt to sell their products, tech companies have pushed the narrative that generative AI will make our lives better: that we need new images generated constantly, or an infinite stream of customized songs, or a text summary of search results. But did they think to ask what people wanted? No, because the only purpose generative AI actually serves is to increase shareholder value by being shoved into as many products as possible.

Let me suspend my cynicism for a moment and consider an example borrowed from a recent talk by computational neuroscientist Terry Sejnowski, though it involves a model that is not strictly generative like the ones mentioned above. In 2016, DeepMind’s AlphaGo beat top Go player Lee Sedol in four out of five games. It learned to play by studying many past games, and then through reinforcement learning by playing against itself. During the second game against Lee, it played a move no one had seen before, surprising experts. Could this be called creativity, or anything beyond approximate retrieval and pattern recognition? Cases like this give us clues to more complex phenomena in deep learning models, and maybe there is a glimmer of hope here for building some useful tools, or for investigating cognition and intelligence in machine learning. Lots of people are studying this, but I love Melanie Mitchell’s work on machine understanding. I highly recommend her book and blog.

Okay, now to switch gears to the silly stuff. There are two weird niche corners where I do think generative AI has (superficial) uses: transient social media art and dumb memes. On social media, things need not be high quality or have any real structure or meaning; they just have to attract and hold your attention for a moment. Recently on TikTok, I’ve been seeing surreal AI-generated art called, hilariously, lobotomycore or liminalcore. AI-generated art, and especially video, generally feels glossy and fake, but there’s something comfortingly creepy about these videos. Maybe it’s the music or the lo-fi effect applied to the video (it’s unclear whether either is AI-generated). I think generative AI works for this genre because common errors, like objects changing between frames or extra fingers, don’t hurt the final product. Also, social media content is consumed with a very short attention span and without much structure, like the video equivalent of the transition music I mentioned earlier. Once again, it’s all just texture! And finding a niche genre that works with generative AI seems like the exception rather than the rule. Here’s an example:

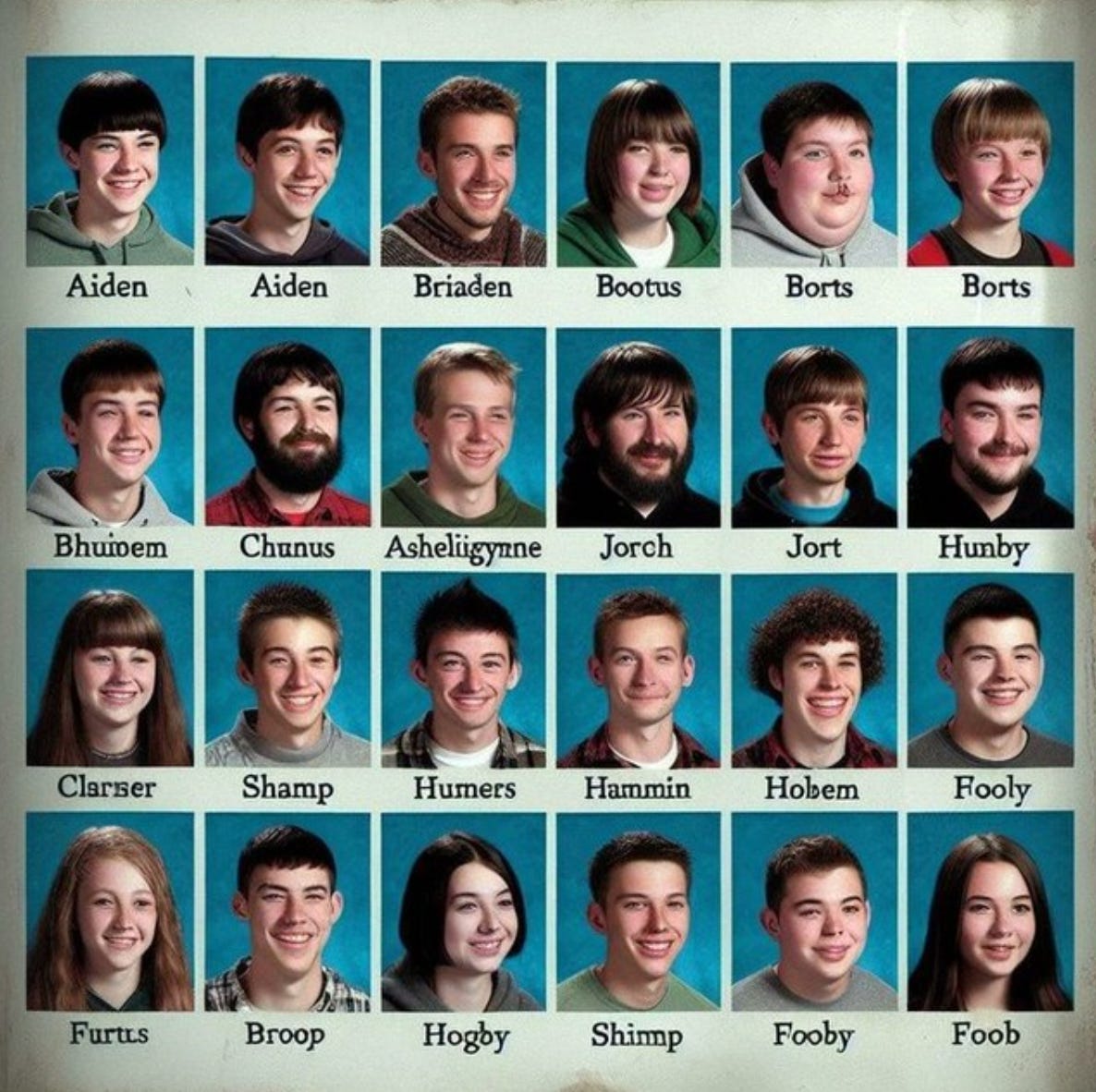

To be clear, I am not endorsing this as real art, just saying generative AI is a bit more suitable here than other “AI art” I’ve seen. As for memes, it’s easy: you can use the failures of AI as the joke. This AI-generated yearbook went viral last month.

And this one is not a meme, but has potential. My computer vision professor generated it and showed it in class. I couldn’t stop laughing.

A friend suggested that soon artists will start making real art that spoofs AI art. Maybe people are already doing this, but it’s an amazing idea.

There’s one last point I want to make in this rambling post. Generative AI, and AI in general, will continue to improve. Maybe one day there will actually be music and art that we find interesting. But we are more discerning than we think. Even now, just a couple of years after the initial ChatGPT release, our standards for generative AI are much higher. Once you’ve seen a few, it’s quite obvious when an image, text, audio, or video is AI-generated. Humans are moving targets, and just because AI is getting better does not mean that it is becoming more useful. People are worried about artists losing jobs, but paradoxically, will the over-hype just make human skills even more valuable?

Let’s keep thinking about these questions. I welcome your comments! Meanwhile, Big Tech will continue to force-feed us bad products we don’t need.

[1] It’s too easy to create prompts that showcase failures, but this was literally my first attempt. My prompt was “a bach-like guitar piece” with the “Instrumental” switch turned on, and I got this.

[2] Ed Newton-Rex, CEO of Fairly Trained and a vocal advocate for transparent data usage, writes about potential Suno data issues here, including many specific examples of resemblance between Suno songs and real songs. I wrote in my last post that we should be highly critical of anyone with financial ties to the AI industry, but I think his analysis is informative and I generally agree with where he’s coming from.

[3] AI expert and professor Melanie Mitchell writes about this in her recent blog post, and attributes the phrase to CS/AI Professor Subbarao Kambhampati.